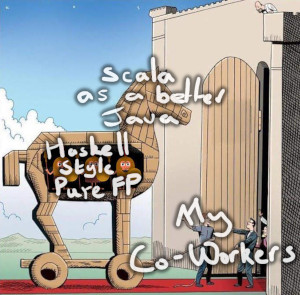

I’ve recently been going through Exercism exercises with a group of Colleagues. The colleagues in question are group of experienced developers, but who generally started with little to no Scala experience.

On the surface, our only goal is to learn some Scala syntax and libraries, by solving some fun problems.

That said, I’m secretly aiming to win them over to joys of my favourite programming style: Purely Functional Programming.

With that in mind, this blog post is about 4 different ways to solve one of the exercises, and what they taught me, not just about Pure FP, but about teaching Pure FP.

The Exercise in question is called “Change”. As described on Exercism, the task in Change is:

Correctly determine the fewest number of coins to be given to a customer such that the sum of the coin’s value would equal the correct amount of change.

In a nutshell, we want to write a function

def findFewestCoins(target: Int, coins: List[Int]): Option[List[Int]]

This should take a target value, and a list of the different coins that we might use, and should return an Option, with a possible list of those coins, that should add up to the value.

Right off the bat, we’ve run into one concept from FP: Our function findFewestCoins is “Partial”, there are some possible sets of coins that can’t be used to make every value.

For example findFewestCoins(target=94, coins=List(5, 10)) doesn’t have an answer.

Because Partial Functions are dangerous we’re using Scala’s Option type to represent a partial function, as a total function with an Option return type; which may return None` for values outside of it’s domain.

Using Option “correctly” can take a while to get used to, for developers without FP experience.

They tend to fall back on the tried and tested technique of writing code that’s liable to throw Null Pointer Exceptions, and then finally wrapping it all in a Try/Catch.

I’m a huge fan of Alexis King’s blog post “Parse, Don’t Validate”,

as an explanation of the correct way to think about Option, although fair warning, it contains a whole bunch of Haskell.

An Approach That Nearly Gets There

An Algorithm for solving the “Change” problem looks pretty simple, let’s dive straight into some code:

object NearlyWorksChange {

// explicitly write out some "useful" results

val noCoins = Some(List.empty[Int])

val failure = None

def findFewestCoins(target: Int, coins: List[Int]): Option[List[Int]] =

(target, coins) match {

// the value 0 is made up from 0 coins

case (0, _) => noCoins

// if we have no "coins" we can't solve the problem

case (_, Nil) => failure

// if the target value is negative, we also can't solve the problem

case (t, _) if t < 0 => failure

// otherwise, we want to investigate 2 possibilities

case (_, h::tail) => {

// Either we do use the first coin

// so we need to know how to make target - it's value

lazy val usingFirstCoin = findFewestCoins(target - h, coins).map(h::_)

// or we don't, so we need to know how to make the target

// without using it

lazy val notUsingFirstCoin = findFewestCoins(target, tail)

// of those two possibilities

// we're interested in the one that involves the fewest coins

List(usingFirstCoin, notUsingFirstCoin).flatten.minByOption(_.length)

}

}

}This is a recursive approach, it has three base cases:

- the value is zero: and the solution is “no coins”

- there are no “candidate” coins: the problem has no solution

- the target value is negative: there’s also no solution

If neither of those cases match, then the solution either does, or doesn’t, involve the first coin on the list. So we can use recursion to solve both of those cases, and then choose the one with the shortest solution.

There’s just one problem with this solution, it’s much too slow.

It passes all of the Exercism test cases, except one.

Change.findFewestCoins(999, List(1, 2, 5, 10, 20, 50, 100))

This doesn’t fail, per say, it just takes an inordinate amount of time, far more than is reasonable.

The problem is, the search space is pretty big, and we’re exploring the entirety of it. More specifically, we’re re-calculating each sub problem every time we run into it. While trying to solve the problem for 999, we wind out re-calculating the solution for 500: “11,390,171 times”.

The State Monad

Fortunately, there’s a drop in solution for the entire category of problems where you need to re-calculate an expensive function very frequently: Memoization

Memoization is an optimization technique where we cache the result of our function calls, using the arguments as a key. And then, when the function is called again with the same arguments, we return those cached results rather than re-calculating them.

We can add Memoization to “Pure Functions” without changing the results of our program, (other than the runtime) because they have no side effects. This is great because I’m able to demonstrate yet another advantage of pure FP to my colleagues.

Since we want to add Memoization, while remaining Purely Functional, one approach is to use the State Monad from the Cats library.

The State Monad can be used to model restricted mutable state, in a purely functional style.

We can think of a value of Cats’ State[S, A] as being similar to a function S => (A, S).

That is, a function that takes some initial state, of type S, and returns an updated state, along with a result of type A.

Thanks to the magic of Cats, we’re able to use for comprehensions to compose individual computations returning State together.

object CatsStateChange {

import cats.data.State

import cats.syntax.all._

// Let's define some type aliases,

// because otherwise our time definitions are going to be really long

// We're storing our cached results in a `Map`

// where the keys are a tuple of the arguments

type Memo = Map[(Int, List[Int]), Option[List[Int]]]

// And that value `Memo` will appear in our `State` Monad

type MemoState[A] = State[Memo, A]

// We need some Initial State to run the Monad.

// At the start of the calculation, we haven't memoized anything yet

// So we can just use an empty Map

val nothingMemoizedYet = Map.empty[(Int, List[Int]), Option[List[Int]]]

// Our two base cases, are `None` or `Some(List.empty) in a `MemoState` Context

val failure = (None :Option[List[Int]]).pure[MemoState]

val noCoins = Option(List.empty[Int]).pure[MemoState]

// The function we're trying to memoize has the type `(Int, List[Int]) => Result`

// This takes a function with those types, and memoizes it via the State Monad

// I find that writing this in a "generic" way, with type parameters

// makes it slightly easier to think about

def memoize[A, R](f: A => State[Map[A, R], R])(a: A): State[Map[A, R], R] = for {

previous <- State.get[Map[A, R]]

result <- previous.get(a) match {

case Some(memoizedResult) => State.pure[Map[A, R], R](memoizedResult)

case None => f(a)

}

_ <- State.modify[Map[A, R]](_.updated(a, result))

} yield result

// This is _more or less_ the same solution we used previously

val findFewestCoinsMemo: ((Int, List[Int])) => MemoState[Option[List[Int]]] =

memoize[(Int, List[Int]), Option[List[Int]]]({

case (0, _) => noCoins

case (_, Nil) => failure

case (t, _) if t < 0 => failure

case (target, h::tail) => for {

usingFirstCoin <- findFewestCoinsMemo(target - h, h::tail)

notUsingFirstCoin <- findFewestCoinsMemo(target, tail)

} yield List(

usingFirstCoin.map(h::_),

notUsingFirstCoin

).flatten.minByOption(_.length)

})(_)

// Finally "run" our memoized function, using an initial empty state

def findFewestCoins(target: Int, candidates: List[Int]): Option[List[Int]] =

findFewestCoinsMemo(target, candidates).run(nothingMemoizedYet).value._2

}With memoization added via the State monad, we pass all of the Exercism test cases.

If I was solving these by myself, I’d probably stop here.

But the point of these exercises is as a learning exercise, and we’ve already introduced two concepts; Option and Memoization.

Trying to explain both of these, and the State Monad, and for-comprehensions in one session is probably too much to introduce in one go.

Let’s try and see if we can pair it back a little.

Philosophical Purity

A mixed blessing associated with programming in Scala, is that the compiler doesn’t enforce purity.

If we want to add side effects or introduce local state, we’re free to do so.

Earlier, we mentioned an advantage of programming with pure functions:

We can safely add Memoization without otherwise changing the behaviour of our program.

Let’s treat this as an excuse to go wild, and add some limited local impurity.

We’re happy to do this, with the proviso that it doesn’t affect our ability to reason about our code as if it were pure.

// Memo2 is a case class that takes a function with two arguments of type A and B

// it contains a mutable map

// Memo2 can be used as a function

// when it is, it looks up the value in the map

// and calculates it if it's missing

// This is in many respects similar to our `memoize` function, from the `State` code.

case class Memo2[A,B,R](f: Function2[A, B, R]) extends Function2[A, B, R] {

import scala.collection.mutable

private val cache = mutable.Map.empty[(A, B), R]

def apply(x: A, y: B) = cache.getOrElseUpdate((x, y), f(x, y))

}

object Memo2Change {

val noCoins = Some(List.empty[Int])

val failure = None

// find fewest coins is a "simple" depth first search

// but it's memoized using Memo2

val findFewestCoins: Function2[Int, List[Int], Option[List[Int]]] =

Memo2[Int, List[Int], Option[List[Int]]]{

case (0, _) => noCoins

case (_, Nil) => failure

case (t, _) if t < 0 => failure

case (target, coins@h::tail) => {

lazy val usingFirstCoin = findFewestCoins(target - h, coins).map(h::_)

lazy val notUsingFirstCoin = findFewestCoins(target, tail)

List(usingFirstCoin, notUsingFirstCoin).flatten.minByOption(_.length)

}

}

}This was the example that I showed to my colleagues. I think it’s the most understandable solution that I’m going to come up with.

There are some caveats around this, for instance, access to the map used in Memo2 isn’t thread safe.

Recursion Schemes

I’m a big believer in occasionally going in the opposite direction, and writing “deliberately fancy code”.

There are a handful of reasons for this, first and foremost, it’s loads of fun.

But even from a pragmatic perspective, there’s a specific category of really weird bugs that you hardly ever see in the course of normal development, but that somehow, beginners are really good at running into. I find that deliberately challenging yourself to use obscure techniques is the fastest way to encounter, and hence understand, those bugs.

For example, while writing this recent blog post that I ran into a bug in the Scala Compiler and learnt about the workaround. This is far more relaxing than being stuck, frantically Googling, in the middle of a pair programming session.

With that said, this is probably a good place to stop reading. Recursion schemes are a large and complex topic, and I’m about to butcher the task of explaining them. This isn’t entirely my fault, the type of Recursion Scheme required to solve “Change”, a histomorphism, is one of the more obscure ones. And unlike other posts about Recursion Schemes, I’m not going to build up to it.

If you want to learn more about Recursion Schemes, I can recommend any of these articles, although the standard Haskell warning applies to the last one.

Recursion Scheme code operates over “Fixed Point Types”.

If you’ve ever tried and failed to construct a recursive Option[_] that was options all the way down

Option[Option[Option[Option[Option[Option[Option[Option[Option[Option[Option[Option[...

Fixed point types let you get close to that, by adding a layer of indirection:

case class Fix[F[_]](unfix: F[Fix[F]])

I’m going to refer to the type argument F in that definition, as the Functor.

Because using a fix point type in a recursion scheme relies on F having a Functor instance; it needs to be something that you can map over.

This let’s you construct values which are very similar to the impossible recursive Option, such as:

Fix[Option](Some(Fix[Option](Some(Fix[Option](None)))))

The fix point of Option is equivalent to a “Natural Number” (an integer that’s either positive or zero).

We can see equivalence, by thinking of None as representing zero, and Some(Fix(x)) as representing x + 1.

Recursion scheme code let’s us write “Morphisms” which take Algebras and Co-Algebras written in terms of the Functor F, and convert them into folds and unfolds over the fixed point version of that functor.

We’re going to use a couple of Recursion schemes below.

The first, and simplest of these is natAlgebra, which takes an Algebra[Option, Int] and produces a function that folds up a Fix[Option] returning the depth of the Option

An Algebra[F[_], A] is literally just a function F[A] => A so we can think of our natAlgebra as a function Option[Int] => Int.

The recursion scheme doing the folding is called a “catamorphism”.

The important thing to bear in mind about the natAlgebra function, is that it doesn’t involve any recursion.

The recursive part of applying an Algebra to a Fixed Point type is abstracted away by the Recursion Scheme.

(We’re using an implementation of recursion schemes from a library called Droste.)

object RecursionSchemeChange {

import higherkindness.droste._

import higherkindness.droste.data._

import higherkindness.droste.data.prelude._

import cats.implicits._

def natAlgebra: Algebra[Option, Int] = Algebra[Option, Int]{

case Some(n) => n+1

case None => 0

}

def toInt[A](x: Attr[Option, A]): Int = scheme.cata(natAlgebra).apply(x.forget)We’re also doing the exact opposite, and using natCoalgebra to convert a (positive) Int to a Fixed Point Option.

In the same way that the algebra is essentially just a function Option[Int] => Int, this is the opposite; a function Int => Option[Int]

A recursion scheme that takes a co-algebra is called an anamorphism.

val natCoalgebra: Coalgebra[Option, Int] =

Coalgebra(n => if (n > 0) Some(n - 1) else None)Next comes the core of the recursion scheme solution.

We’re going to phrase the change problem as CVAlgebra.

This is like similar to the Algebra used in natAlgebra.

Except that instead of operating on an Option[Int], we’re operating on an Option[Attr[Option, A]].

A is our result type, Option[List[Int]].

Attr is another Fixed Point type, loosely defined as:

case class Attr[F[_], A](attribute: A, hole: F[Attr[F, A]])

The purpose of the hole variable, is that once we lift this CVAlgebra using a recursion scheme called a “histomorphism”,

the hole will contain the results of “smaller” values in the recursion.

We can use this like the cache in any of the previous examples.

// Lookup a value from the recursion scheme "history"

// "i" is the number of "layers" to go back

// so if you're currently computing a value for 10 `lookup(4, attr)`

// will be the value computed for 6

def lookup[A](i: Int, v: Option[Attr[Option, A]]): Option[A] = (i, v) match {

case (0, Some(Attr(a, _))) => Some(a)

case (n, Some(Attr(_, tail))) => lookup(n - 1, tail)

case (_, None) => None

}Unlike the previous implementation of findFewestCoins, our implementation as a CVAlgebra closes over the list of coins.

We use the same list of coins at every point in the search tree.

And instead of asking “do we use the first coin or not”, at each level, we ask, “which of these coins do we use next”.

Finally we combine the CVAlgebra with our natCoalgebra, forming a “hylomorphism”.

A hylomorphism is a recursion scheme that combines a histomorphism, and an anamorphism.

The anamorphism is just responsible for converting from the amount as an Int, to the “Fixed Point of Option” form.

An advantage to chaining recursion schemes together like this is we don’t have to construct the entire Fixed Point object in memory at once. (That might be hearsay, I’ve not peaked at the internals of Droste).

def findFewestCoins(amount: Int, coins: List[Int]): Option[List[Int]] = {

val coinAlgebra: CVAlgebra[Option, Option[List[Int]]] = CVAlgebra {

// this is our base case, corresponding to a value of zero

case None => Some(List.empty[Int])

// this is our recursive case, where the value is positive

case cur@Some(next) => {

val toProcess = coins.filter(_ <= 1 + toInt(next))

val processedResults: List[List[Int]] = toProcess.map{ coin =>

lookup(coin-1, cur) // lookup the values in the history

.flatten // flatten: to combine 2 layers of `Option`

.map(coin :: _) // then add the current coin to the prior result

}.flatten // and flatten, removing `None`s, coins that can't appear here

processedResults.minByOption(_.length)

}

}

val f = scheme.ghylo(

coinAlgebra.gather(Gather.histo),

natCoalgebra.scatter(Scatter.ana))

// Negative numbers can't be represented as a Nat

// So filter them out first

Some(amount).filter(_ >= 0).flatMap(f)

}

}As mentioned above, the Recursion Scheme solution introduces a lot of concepts at once.

Hopefully, if you’ve read this far, and you take one thing home from it, it will be the following.

Recursion schemes let you write a non recursive Algebra, and then lift this into a recursive function.

I might not get to use this a lot, but I think that it’s incredibly cool that it’s even possible.

Appendix

This blog post is Literate Scala, view the raw source-code here.

If you’re trying to run it, you may find the build.sbt file containing the specific version of the dependencies to be valuable.

The build.sbt has the following contents:

scalaVersion := "2.13.3"

addCompilerPlugin("com.olegpy" %% "better-monadic-for" % "0.3.1")

libraryDependencies += "org.scalatest" %% "scalatest" % "3.2.0" % "test"

libraryDependencies += "org.typelevel" %% "cats-core" % "2.0.0"

libraryDependencies += "io.higherkindness" %% "droste-core" % "0.8.0"